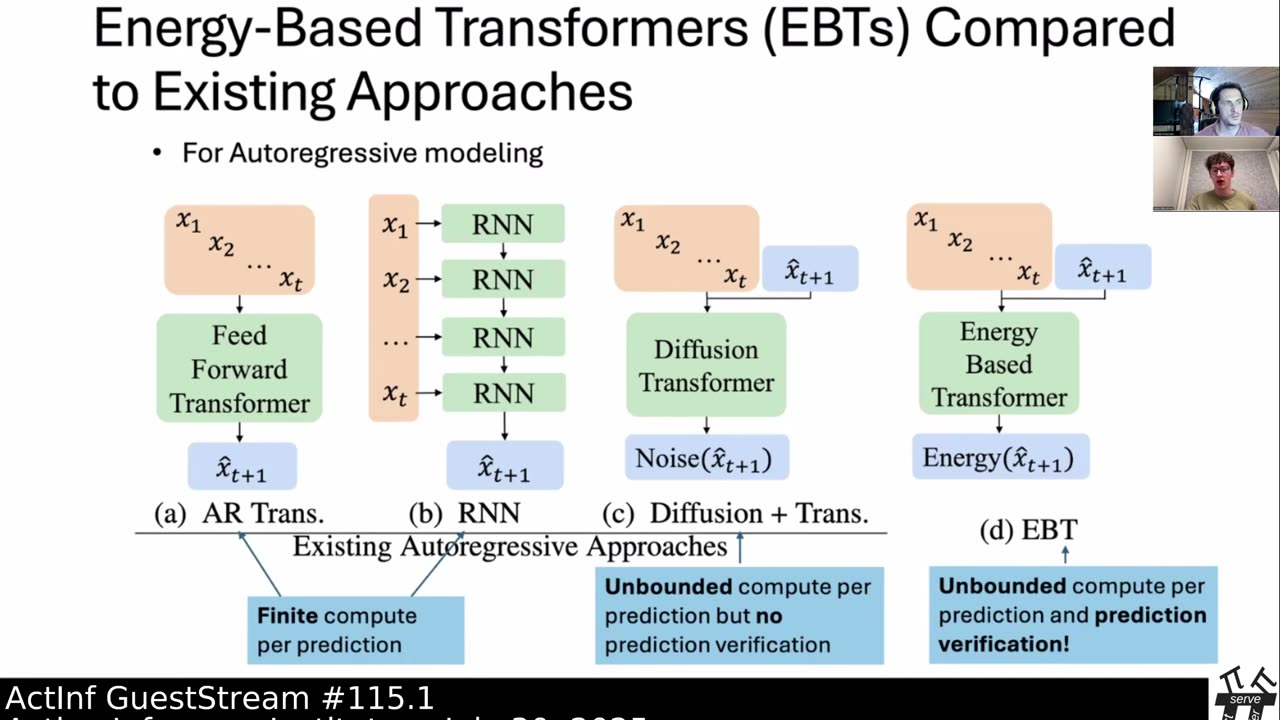

ActInf GuestStream 115.1 ~ Energy-Based Transformers and the Future of Scaling

"Energy-Based Transformers are Scalable Learners and Thinkers"

Alexi Gladstone, Ganesh Nanduru, Md Mofijul Islam, Peixuan Han, Hyeonjeong Ha, Aman Chadha, Yilun Du, Heng Ji, Jundong Li, Tariq Iqbal

https://arxiv.org/abs/2507.02092

Inference-time computation techniques, analogous to human System 2 Thinking, have recently become popular for improving model performances. However, most existing approaches suffer from several limitations: they are modality-specific (e.g., working only in text), problem-specific (e.g., verifiable domains like math and coding), or require additional supervision/training on top of unsupervised pretraining (e.g., verifiers or verifiable rewards). In this paper, we ask the question "Is it possible to generalize these System 2 Thinking approaches, and develop models that learn to think solely from unsupervised learning?" Interestingly, we find the answer is yes, by learning to explicitly verify the compatibility between inputs and candidate-predictions, and then re-framing prediction problems as optimization with respect to this verifier. Specifically, we train Energy-Based Transformers (EBTs) -- a new class of Energy-Based Models (EBMs) -- to assign an energy value to every input and candidate-prediction pair, enabling predictions through gradient descent-based energy minimization until convergence. Across both discrete (text) and continuous (visual) modalities, we find EBTs scale faster than the dominant Transformer++ approach during training, achieving an up to 35% higher scaling rate with respect to data, batch size, parameters, FLOPs, and depth. During inference, EBTs improve performance with System 2 Thinking by 29% more than the Transformer++ on language tasks, and EBTs outperform Diffusion Transformers on image denoising while using fewer forward passes. Further, we find that EBTs achieve better results than existing models on most downstream tasks given the same or worse pretraining performance, suggesting that EBTs generalize better than existing approaches. Consequently, EBTs are a promising new paradigm for scaling both the learning and thinking capabilities of models.

Subjects: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

Cite as: arXiv:2507.02092 [cs.LG]

(or arXiv:2507.02092v1 [cs.LG] for this version)

https://doi.org/10.48550/arXiv.2507.02092
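
The abstract describes prediction as gradient descent on an energy assigned to each input and candidate-prediction pair. The following is a minimal sketch of that loop only, not the paper's implementation. The names `toy_energy`, `numerical_grad`, and `predict_by_energy_minimization` are hypothetical. The quadratic energy stands in for a learned Energy-Based Transformer, and finite differences stand in for automatic differentiation.

```python
from typing import Callable, List, Tuple

Vector = List[float]
EnergyFn = Callable[[Vector, Vector], float]


def toy_energy(x: Vector, y: Vector) -> float:
    """Hypothetical stand-in for a learned energy model.

    Returns a low value when the candidate y is compatible with the input x;
    here "compatible" is assumed to mean y is close to 2 * x elementwise.
    """
    return 0.5 * sum((yi - 2.0 * xi) ** 2 for xi, yi in zip(x, y))


def numerical_grad(energy: EnergyFn, x: Vector, y: Vector, eps: float = 1e-5) -> Vector:
    """Central-difference gradient of the energy with respect to the candidate y.

    A real model would use automatic differentiation; this keeps the sketch
    dependency-free.
    """
    grad = []
    for i in range(len(y)):
        up = y[:i] + [y[i] + eps] + y[i + 1:]
        down = y[:i] + [y[i] - eps] + y[i + 1:]
        grad.append((energy(x, up) - energy(x, down)) / (2.0 * eps))
    return grad


def predict_by_energy_minimization(
    energy: EnergyFn,
    x: Vector,
    y_init: Vector,
    step_size: float = 0.5,
    max_steps: int = 100,
    tol: float = 1e-6,
) -> Tuple[Vector, int]:
    """Refine a candidate prediction by gradient descent on the energy.

    Stops when the gradient norm falls below tol (treated here as
    convergence) or after max_steps updates. Returns the final candidate and
    the number of update steps taken.
    """
    y = list(y_init)
    for step in range(max_steps):
        grad = numerical_grad(energy, x, y)
        grad_norm = sum(g * g for g in grad) ** 0.5
        if grad_norm < tol:
            return y, step
        y = [yi - step_size * gi for yi, gi in zip(y, grad)]
    return y, max_steps


if __name__ == "__main__":
    x = [1.0, -2.0]
    y_hat, steps = predict_by_energy_minimization(toy_energy, x, [0.0, 0.0])
    print("prediction:", y_hat, "steps:", steps)
```

In this sketch, the number of update steps plays the role of inference-time computation: a candidate that starts far from the energy minimum takes more steps before the gradient norm drops below the tolerance.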

Active Inference Institute information:

Website: https://www.activeinference.institute/

Activities: https://activities.activeinference.institute/

Discord: https://discord.activeinference.institute/

Donate: http://donate.activeinference.institute/

YouTube: https://www.youtube.com/c/ActiveInference/

X: https://x.com/InferenceActive

Active Inference Livestreams: https://video.activeinference.institute/
