Run Stable Diffusion Locally on an AMD Instinct MI60: Live Linux Setup

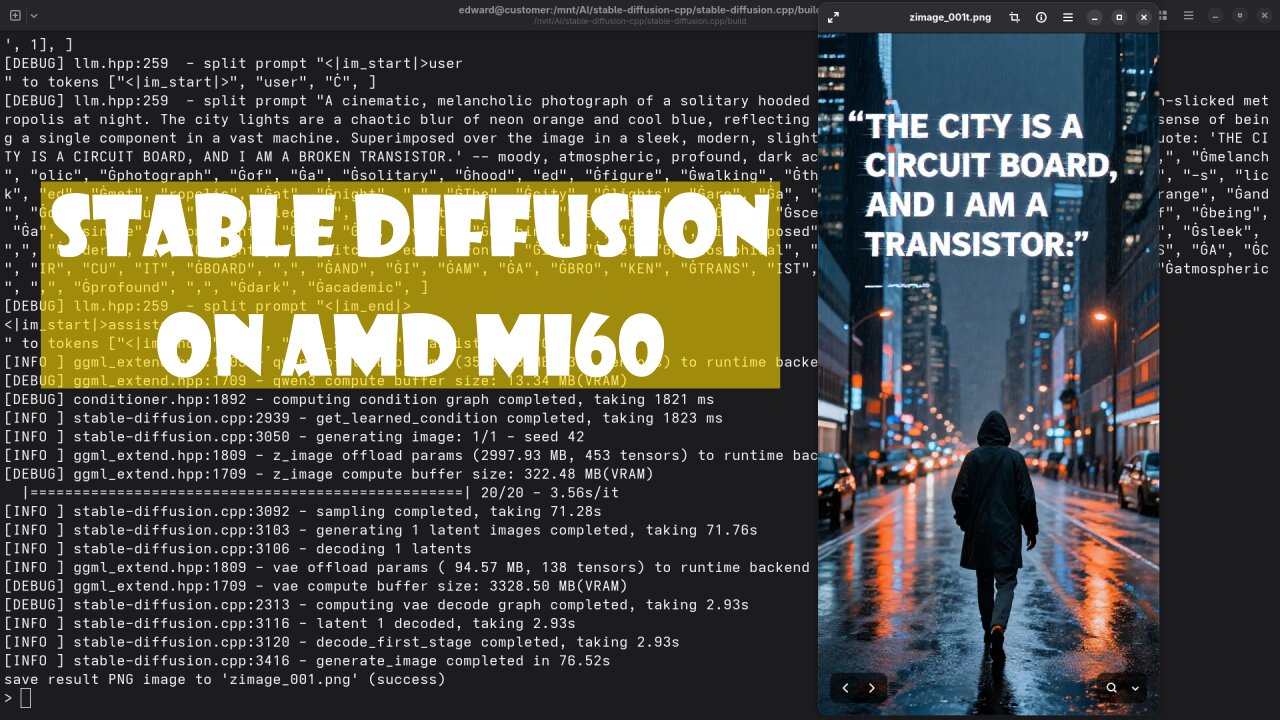

In this live screencast, I demonstrate how to run stable-diffusion.cpp with the Z-Image-Turbo Q3_K GGUF 6B model on Linux using an AMD Instinct MI60 GPU with 32 GB of HBM2 memory.

This session is beginner-friendly and focuses on real-world setup, configuration, and inference performance on an AMD data center GPU. You will see how GGUF models work with stable-diffusion.cpp, how ROCm detects the MI60, and how images are generated locally without cloud services.

This setup is ideal for developers, AI enthusiasts, and Linux users who want fast local image generation using open-source tools and Apache 2.0-licensed models.

Blog article with full written guide

https://ojambo.com/review-generative-ai-z-image-turbo-q3-K-gguf-6b-model

Learning Python book on Amazon

https://www.amazon.com/Learning-Python-Programming-eBook-Beginners-ebook/dp/B0D8BQ5X99

Learning Python course

https://ojamboshop.com/product/learning-python

One on one online Python tutoring

https://ojambo.com/contact

Z Image Turbo installation or migration services

https://ojamboservices.com/contact

If you find this video helpful, consider liking the video, subscribing to the channel, and sharing it with others interested in local AI and Linux GPU workflows.

#StableDiffusion #StableDiffusionCPP #AMDInstinct #AMDMI60 #LinuxAI #GenerativeAI #ImageGeneration #GGUF #OpenSourceAI #ROCm #LocalAI
