
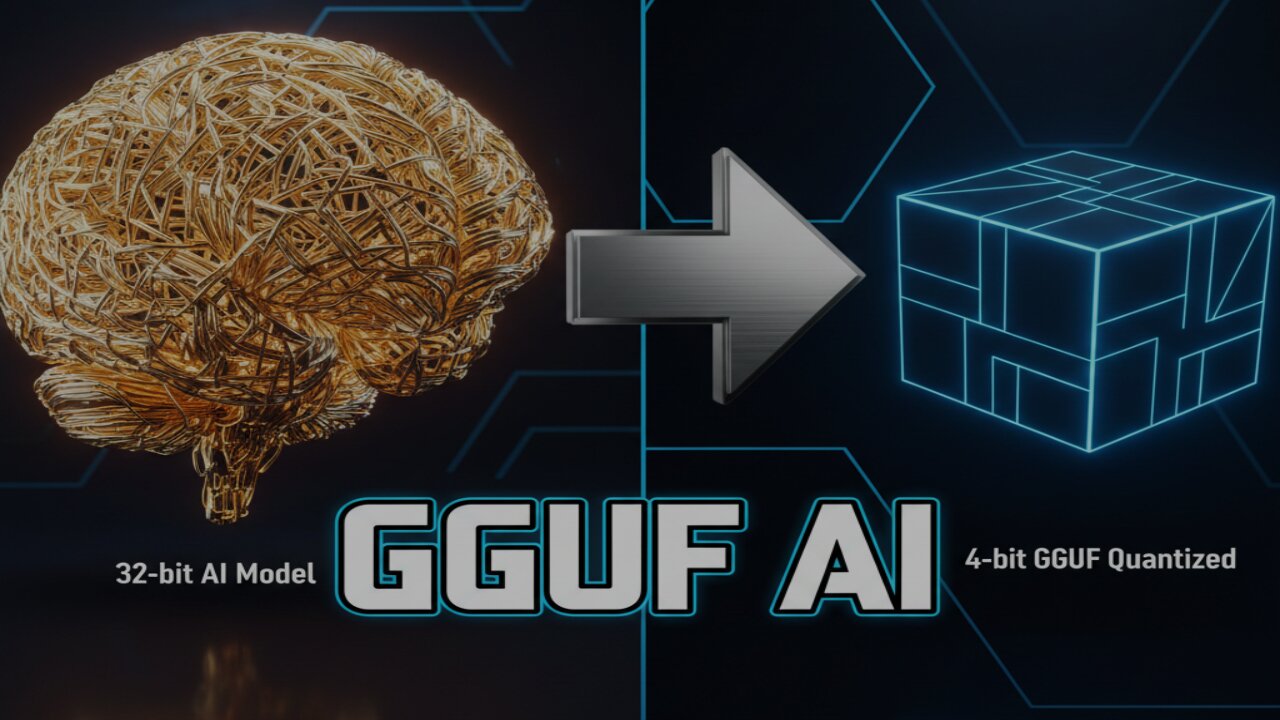

Local AI Basics: GGUF Quantization And Llama.cpp Explained

In this screencast you will explore the inner workings of local AI without needing to write complex code. You will learn the difference between the Safetensors and GGUF file formats, and how quantization shrinks large models enough to fit on home hardware. The screencast also demonstrates how llama.cpp serves as the engine for running AI privately on Fedora Linux. This video is aimed at beginners who want to understand the architecture behind their favorite local AI tools.
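As a rough illustration of why quantization lets large models fit on home hardware, here is a small Python sketch. It is not code from the video. It assumes a hypothetical 7-billion-parameter model and ignores everything except the weights themselves (no KV cache, activations, or file metadata). The 8.5 and 4.5 bits-per-weight figures are the effective sizes of llama.cpp's Q8_0 and Q4_0 GGUF formats, which store one 16-bit scale per block of 32 weights. The quantize/dequantize pair is a simplified Q8_0-style sketch, not llama.cpp's actual implementation.

```python
# Approximate weight memory at different precisions, plus a simplified
# block quantization round trip. Illustrative sketch only.

def weight_size_gib(num_params: int, bits_per_weight: float) -> float:
    """Return the approximate size of the model weights in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


def quantize_block_q8(values):
    """Symmetric 8-bit quantization of one block (simplified Q8_0-style sketch).

    Every value in the block shares one scale, so only small integers in
    the range -127..127 plus a single float need to be stored.
    """
    amax = max(abs(v) for v in values)
    scale = amax / 127 if amax else 1.0
    return scale, [round(v / scale) for v in values]


def dequantize_block_q8(scale, quants):
    """Recover approximate float values from the shared scale and integers."""
    return [q * scale for q in quants]


PARAMS = 7_000_000_000  # hypothetical 7B-parameter model

# Many Safetensors checkpoints ship in 16-bit precision; Q8_0 and Q4_0
# are common GGUF quantization types used by llama.cpp.
for label, bits in [("FP16", 16), ("Q8_0", 8.5), ("Q4_0", 4.5)]:
    print(f"{label:>5}: {weight_size_gib(PARAMS, bits):5.1f} GiB")

scale, quants = quantize_block_q8([0.5, -1.0, 0.25])
print(quants, dequantize_block_q8(scale, quants))
```

The size estimate shows the trade-off in numbers: the same model drops from roughly 13 GiB at 16-bit to under 4 GiB at 4.5 bits per weight. The round trip shows the cost, since each restored value can differ from the original by up to half of the block's scale.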

Read the full blog article here: https://ojambo.com/understanding-local-ai-architecture-gguf-and-quantization

Take Your Skills Further:

Books: https://www.amazon.com/stores/Edward-Ojambo/author/B0D94QM76N

Online Courses: https://ojamboshop.com/product-category/course

One-on-One Tutorials: https://ojambo.com/contact

Consultation Services: https://ojamboservices.com/contact

#AI #MachineLearning #Linux #LlamaCPP #GGUF #Quantization #OpenSource #Privacy
