
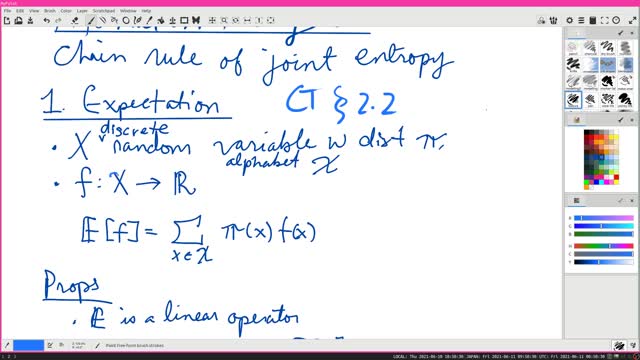

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (uncertainty) of two variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if X and Y are independent, then H(Y | X) = H(Y), so the uncertainty of two independent variables is the sum of their individual uncertainties: H(X, Y) = H(X) + H(Y).
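A minimal Python sketch of the chain rule, assuming a finite joint distribution stored as a dictionary that maps (x, y) pairs to probabilities. The helper names and both example distributions are hypothetical illustrations, not taken from the video. It computes both sides of H(X, Y) = H(X) + H(Y | X) in bits, then checks the independent case, where H(Y | X) reduces to H(Y).

```python
import math
from itertools import product


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal_x(pxy):
    """Marginal p(x) from a joint distribution stored as {(x, y): p}."""
    px = {}
    for (x, _y), p in pxy.items():
        px[x] = px.get(x, 0.0) + p
    return px


def conditional_entropy_y_given_x(pxy):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x)."""
    total = 0.0
    for x, p_x in marginal_x(pxy).items():
        # Conditional distribution p(y | x) = p(x, y) / p(x)
        cond = [p / p_x for (xx, _y), p in pxy.items() if xx == x]
        total += p_x * entropy(cond)
    return total


def chain_rule_sides(pxy):
    """Return (H(X, Y), H(X) + H(Y | X)) for a joint distribution."""
    lhs = entropy(pxy.values())
    rhs = entropy(marginal_x(pxy).values()) + conditional_entropy_y_given_x(pxy)
    return lhs, rhs


# Hypothetical dependent joint distribution over X, Y in {0, 1}.
dependent = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

# Hypothetical independent pair: p(x, y) = p(x) * p(y).
px = {0: 0.5, 1: 0.5}
py = {0: 0.25, 1: 0.75}
independent = {(x, y): px[x] * py[y] for x, y in product(px, py)}

if __name__ == "__main__":
    lhs, rhs = chain_rule_sides(dependent)
    print(f"H(X,Y) = {lhs:.4f}, H(X) + H(Y|X) = {rhs:.4f}")

    # For independent variables H(Y|X) = H(Y), so H(X,Y) = H(X) + H(Y).
    h_joint = entropy(independent.values())
    h_sum = entropy(px.values()) + entropy(py.values())
    print(f"H(X,Y) = {h_joint:.4f}, H(X) + H(Y) = {h_sum:.4f}")
```

Using a dictionary keyed by (x, y) keeps the sketch dependency-free; for large alphabets a 2-D array of probabilities would be the more practical representation.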

#InformationTheory #CoverThomas

Loading comments...

-

15:22

15:22

Dr. Ajay Kumar PHD (he/him)

3 years agoBitcoin is Triangles | Euclid's Elements Book 1 Proposition 37

13 -

57:16

57:16

Calculus Lectures

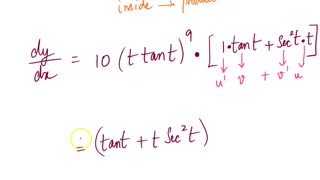

5 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

82 -

2:40

2:40

KGTV

4 years agoPatient information compromised

361 -

5:29

5:29

Gamazda

11 hours ago $0.15 earnedMetallica - Nothing Else Matters (Live Piano in a Church)

4043 -

2:50:56

2:50:56

The Confessionals

19 hours agoHe Killed a Monster (Then They Told Him to Stay Silent)

8082 -

41:42

41:42

Brad Owen Poker

13 hours agoMy BIGGEST WIN EVER!! $50,000+ In DREAM Session!! Must See! BEST I’ve Ever Run! Poker Vlog Ep 360

27 -

17:53

17:53

The Illusion of Consensus

17 hours agoWhat Women REALLY Want in Public (It’s Not What You Think) | Geoffrey Miller

73 -

1:32:16

1:32:16

Uncommon Sense In Current Times

16 hours ago $0.02 earnedPolygyny Debate: The Biblical Case For and Against Plural Marriage | Uncommon Sense

3.94K -

LIVE

LIVE

BEK TV

22 hours agoTrent Loos in the Morning - 12/17/2025

159 watching -

23:42

23:42

Athlete & Artist Show

4 days ago $0.01 earnedWorld Junior Invites & Snubs, NHL Threatens To Pull Out Of Olympics

1.58K