
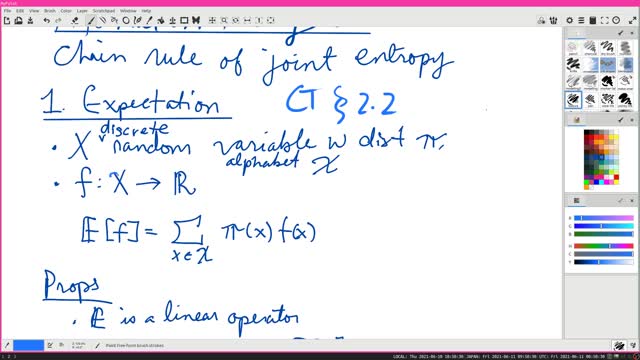

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (uncertainty) of two variables equals the entropy of one plus the conditional entropy of the other given the first. In particular, if the variables are independent, then H(Y | X) = H(Y), so the uncertainty of the pair is simply the sum of the two individual uncertainties: H(X, Y) = H(X) + H(Y).
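To make the chain rule concrete, here is a minimal Python sketch (not taken from the video) that checks it numerically. It assumes a joint pmf stored as a dict mapping (x, y) to p(x, y); the helper names and the example probabilities are illustrative choices, not a standard API. Entropies are in bits.

```python
from math import log2

# Assumption: a joint pmf is a dict mapping (x, y) -> p(x, y).

def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute 0 (0 log 0 = 0)."""
    return -sum(p * log2(p) for p in probs if p > 0)

def marginal(joint, axis):
    """Marginal pmf of X (axis=0) or Y (axis=1), summing out the other variable."""
    m = {}
    for pair, p in joint.items():
        m[pair[axis]] = m.get(pair[axis], 0.0) + p
    return m

def joint_entropy(joint):
    """H(X, Y) = -sum over (x, y) of p(x, y) log2 p(x, y)."""
    return entropy(joint.values())

def conditional_entropy(joint):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x)."""
    px = marginal(joint, 0)
    total = 0.0
    for x, p_x in px.items():
        # Conditional pmf p(y | x) = p(x, y) / p(x) for this fixed x.
        cond = [p / p_x for (xx, _y), p in joint.items() if xx == x]
        total += p_x * entropy(cond)
    return total

# Dependent example: H(X, Y) should equal H(X) + H(Y | X).
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}
h_x = entropy(marginal(joint, 0).values())
print(f"H(X,Y) = {joint_entropy(joint):.4f}")                      # H(X,Y) = 1.7500
print(f"H(X) + H(Y|X) = {h_x + conditional_entropy(joint):.4f}")  # H(X) + H(Y|X) = 1.7500

# Independent example: p(x, y) = p(x) p(y), so H(Y | X) = H(Y).
px, py = {0: 0.5, 1: 0.5}, {0: 0.25, 1: 0.75}
indep = {(x, y): px[x] * py[y] for x in px for y in py}
print(f"H(X,Y) = {joint_entropy(indep):.4f}")                            # H(X,Y) = 1.8113
print(f"H(X) + H(Y) = {entropy(px.values()) + entropy(py.values()):.4f}")  # H(X) + H(Y) = 1.8113
```

In the dependent case H(X) is about 0.811 bits and H(Y | X) about 0.939 bits, which add up to the 1.75 bits of joint entropy. In the independent case, conditioning on X tells us nothing about Y, so the joint entropy is just the sum of the marginal entropies.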

#InformationTheory #CoverThomas

Loading comments...

-

31:02

31:02

Dr. Ajay Kumar PHD (he/him)

3 years agoN

35 -

57:16

57:16

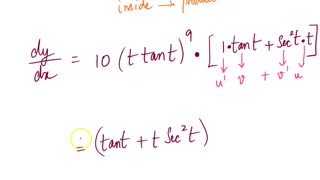

Calculus Lectures

4 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

80 -

2:40

2:40

KGTV

4 years agoPatient information compromised

341 -

LIVE

LIVE

LFA TV

4 hours agoLIVE & BREAKING NEWS! | THURSDAY 10/2/25

3,741 watching -

UPCOMING

UPCOMING

Chad Prather

14 hours agoWhen God Delays: Trusting Jesus in the Waiting Room of Life

24.7K6 -

The Chris Salcedo Show

13 hours ago $6.91 earnedThe Democrat's Schumer Shutdown

19K2 -

30:32

30:32

Game On!

17 hours ago $2.90 earned20,000 Rumble Followers! Thursday Night Football 49ers vs Rams Preview!

26.5K3 -

1:26

1:26

WildCreatures

14 days ago $3.26 earnedCow fearlessly grazes in crocodile-infested wetland

29.3K3 -

29:54

29:54

DeVory Darkins

1 day ago $16.82 earnedHegseth drops explosive speech as Democrats painfully meltdown over Trump truth social post

76.3K78 -

19:39

19:39

James Klüg

1 day agoAnti-Trump Protesters Threaten To Pepper Spray Me For Trying To Have Conversations

41.3K28