Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

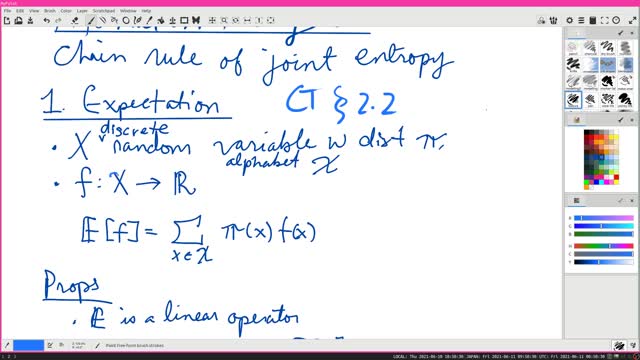

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (= uncertainty) of two variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if the variables are independent, then H(Y | X) = H(Y), so the joint uncertainty is the sum of the two individual uncertainties.
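The identity can be checked numerically on a small example. The following is a minimal sketch, not material from the video: the joint distribution `joint`, the helper names `entropy` and `chain_rule_terms`, and the choice of bits (log base 2) are all assumptions made for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def chain_rule_terms(joint):
    """Return (H(X,Y), H(X), H(Y|X)) for a dict mapping (x, y) -> p(x, y)."""
    # Marginal p(x), obtained by summing the joint over y.
    p_x = {}
    for (x, _), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p

    h_xy = entropy(joint.values())
    h_x = entropy(p_x.values())

    # H(Y|X) = sum over x of p(x) * H(Y | X = x).
    h_y_given_x = 0.0
    for x, px in p_x.items():
        conditional = [p / px for (xi, _), p in joint.items() if xi == x]
        h_y_given_x += px * entropy(conditional)

    return h_xy, h_x, h_y_given_x


# Hypothetical dependent joint distribution, chosen only for illustration.
joint = {("a", 0): 0.5, ("a", 1): 0.25, ("b", 0): 0.125, ("b", 1): 0.125}
h_xy, h_x, h_y_given_x = chain_rule_terms(joint)
chain_rule_holds = math.isclose(h_xy, h_x + h_y_given_x)

# Hypothetical independent case: p(x, y) = p(x) * p(y).
p_x = {"a": 0.75, "b": 0.25}
p_y = {0: 0.5, 1: 0.5}
independent = {(x, y): px * py for x, px in p_x.items() for y, py in p_y.items()}
ind_h_xy, ind_h_x, ind_h_y_given_x = chain_rule_terms(independent)
h_y = entropy(p_y.values())
entropies_add = math.isclose(ind_h_xy, ind_h_x + h_y)
```

In the dependent case, H(Y | X) is computed from the conditional distributions and the two sides of the chain rule agree. In the independent case, H(Y | X) coincides with H(Y), so the joint entropy reduces to H(X) + H(Y).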

#InformationTheory #CoverThomas

Loading comments...

-

12:26

12:26

Dr. Ajay Kumar PHD (he/him)

3 years agoBitcoin is Obvious | Euclid's Elements Book 1 Prop 41

13 -

57:16

57:16

Calculus Lectures

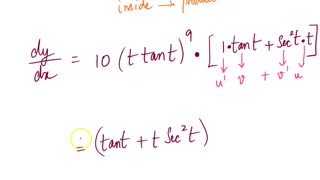

5 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

82 -

2:40

2:40

KGTV

4 years agoPatient information compromised

361 -

1:02:04

1:02:04

VINCE

2 hours agoAre We Really Being Told The Full Story? | Episode 190 - 12/17/25 VINCE

156K73 -

LIVE

LIVE

The Bubba Army

22 hours agoTRUMP'S CHIEF OF STAFF RUNNING WILD! - Bubba the Love Sponge® Show | 12/17/25

507 watching -

1:09:13

1:09:13

Graham Allen

3 hours agoTrump To Address The Nation! + Candace Bends The Knee, The Cult Turns On Her!

50.6K402 -

LIVE

LIVE

Wendy Bell Radio

6 hours agoIs She Even An American?

6,247 watching -

1:14:09

1:14:09

Chad Prather

16 hours agoHow to Recognize When God Has Already Answered Your Prayer

36.3K21 -

1:33:59

1:33:59

Game On!

20 hours ago $4.15 earnedBIGGEST 2025 College Football Playoff 1st Round BETS NOW!

43.6K2 -

1:04:39

1:04:39

Crypto Power Hour

13 hours ago $7.21 earnedState of Early Stage Crypto Investor Rob Good

66.7K9