Premium Only Content

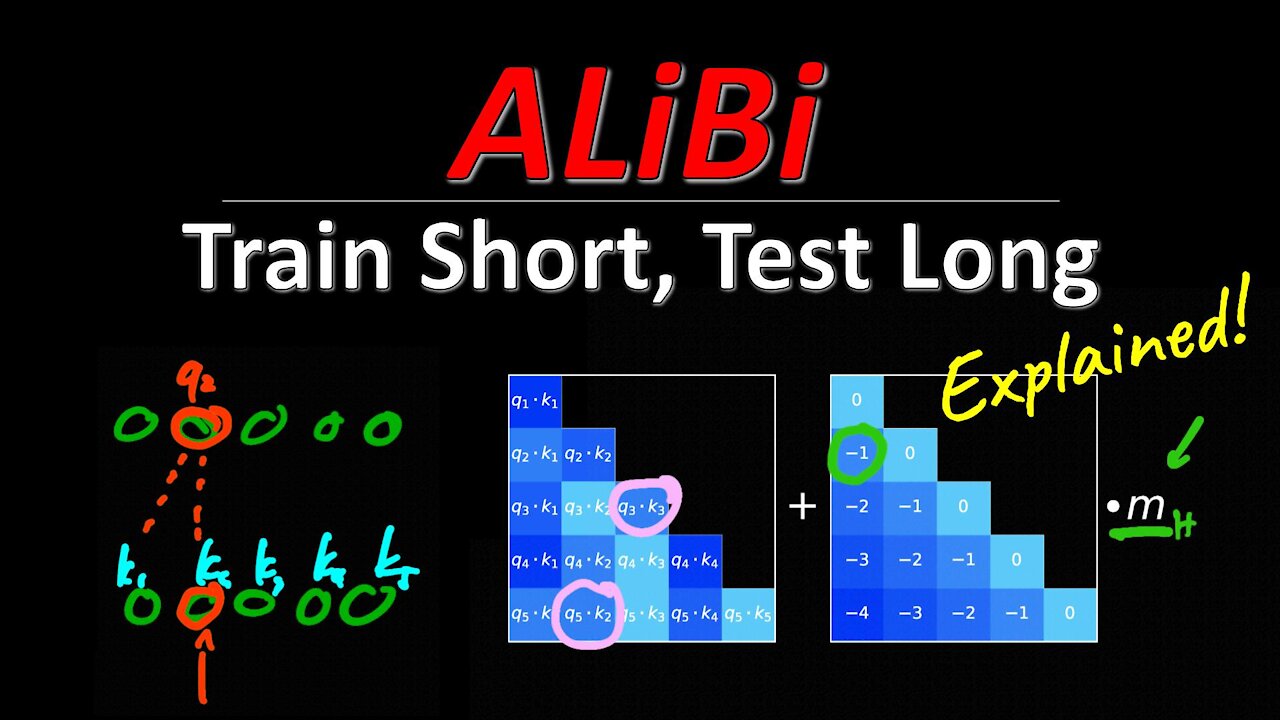

ALiBi - Train Short, Test Long: Attention with linear biases enables input length extrapolation

#alibi #transformers #attention

Transformers are essentially set models that need additional inputs to make sense of sequence data. The most widespread additional inputs are position encodings or position embeddings, which add sequence index information in various forms. However, this has put a limit on the resulting model, which cannot run inference on sequences longer than it has been trained on, as it would encounter unfamiliar position encodings. ALiBi solves this by proposing simple linear fixed biases as position information, adding negligible overhead in time and memory, but surprisingly, the resulting model is able to handle inference on sequences many times as long as its training sequences.

OUTLINE:

0:00 - Intro & Overview

1:40 - Position Encodings in Transformers

4:55 - Sinusoidial Position Encodings

11:50 - ALiBi Position Encodings

20:50 - How to choose the slope parameter

23:55 - Experimental Results

29:10 - Comments & Conclusion

Paper: https://ofir.io/train_short_test_long...

Code: https://github.com/ofirpress/attentio...

Abstract:

Since the introduction of the transformer model by Vaswani et al. (2017), a fundamental question remains open: how to achieve extrapolation at inference time to longer sequences than seen during training? We first show that extrapolation can be improved by changing the position representation method, though we find that existing proposals do not allow efficient extrapolation. We introduce a simple and efficient method, Attention with Linear Biases (ALiBi), that allows for extrapolation. ALiBi does not add positional embeddings to the word embeddings; instead, it biases the query-key attention scores with a term that is proportional to their distance. We show that this method allows training a 1.3 billion parameter model on input sequences of length 1024 that extrapolates to input sequences of length 2048, achieving the same perplexity as a sinusoidal position embedding model trained on inputs of length 2048, 11% faster and using 11% less memory. ALiBi’s inductive bias towards recency allows it to outperform multiple strong position methods on the WikiText-103 benchmark. Finally, we provide analysis of ALiBi to understand why it leads to better performance.

Authors: Ofir Press, Noah A. Smith, Mike Lewis

Links:

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

Minds: https://www.minds.com/ykilcher

Parler: https://parler.com/profile/YannicKilcher

LinkedIn: https://www.linkedin.com/in/yannic-ki...

BiliBili: https://space.bilibili.com/1824646584

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

-

4:29

4:29

Sheriff Mike

4 years agoThe Mile Long Coal Train, Fly with Mike

38 -

2:32

2:32

The PyTorch Channel

4 years agoTutorial 1: Train a simple linear regression model

49 -

4:52

4:52

CAMINOJOURNEY

4 years agoHow To Train Your Dog To Pay Attention

25 -

![THIS GAME IS SO OLD :: Half-Life (1998) :: FINISHING IT TODAY [FIRST TIME PLAYING] {18+}](https://1a-1791.com/video/fww1/5e/s8/1/A/6/a/y/A6ayz.0kob-small-THIS-GAME-IS-SO-OLD-Half-Li.jpg) LIVE

LIVE

a12cat34dog

2 hours agoTHIS GAME IS SO OLD :: Half-Life (1998) :: FINISHING IT TODAY [FIRST TIME PLAYING] {18+}

78 watching -

10:23

10:23

Forrest Galante

11 hours agoAsking an Indian Billionaire Why He Is Saving 1 Million Animals

88.9K24 -

LIVE

LIVE

LexTronic

1 hour agoEditing Photos from DreakHack

50 watching -

LIVE

LIVE

DoldrumDan

1 hour agoSEKIRO DAY 18 FIRST PLAYTHROUGH - DAY 37 NEW LIFE

28 watching -

23:30

23:30

Lady Decade

23 hours ago $20.43 earnedYakuza Kiwami 3 is Causing Outrage !

50.5K13 -

LIVE

LIVE

Lofi Girl

3 years agolofi hip hop radio 📚 - beats to relax/study to

148 watching -

Pepkilla

2 hours agoArc Raiders First Try Send HALLLPPPP

3521